Ensemble Model Strengths... and Some Weaknesses

Ensemble modeling combines several models so that they work together to produce a stronger predictive model than any one of them alone. Many modeling techniques can be combined, including decision trees, neural networks, random forests, and support vector machines. Ensemble algorithms that are used to improve a model's performance, or to reduce the chance of selecting a poor model, include bagging, boosting, and stacking.

Bagging (bootstrap aggregating), introduced in 1996, is one of the earliest ensemble techniques and is still widely used. The algorithm trains a classifier on each of many bootstrap samples of the data (random subsets drawn with replacement) and combines their predictions, typically by voting or averaging, to reduce variance error. Its strengths include robust performance in the presence of outliers, reduced variance (which helps avoid overfitting), little need for additional parameter tuning, and the ability to accommodate highly nonlinear interactions. One weakness of bootstrap aggregating is that as the model becomes more complex, users lose transparency and interpretability.
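To make the resample-then-vote idea concrete, here is a minimal sketch in plain Python. It is not taken from any particular library: the helper names (`fit_stump`, `bagging_fit`, `bagging_predict`), the one-feature threshold classifier used as the base model, and the toy data are all assumptions chosen for illustration.

```python
import random
from collections import Counter

def fit_stump(points):
    """Fit a one-feature threshold rule (a decision stump) to (x, label) pairs."""
    best = None
    for threshold, _ in points:
        # Try both orientations: (label at or below threshold, label above it)
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= threshold else right) != y for x, y in points)
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    _, threshold, left, right = best
    return lambda x: left if x <= threshold else right

def bagging_fit(points, n_models=25, seed=0):
    """Train one stump per bootstrap sample (same size as the data, drawn with replacement)."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        sample = [rng.choice(points) for _ in points]
        models.append(fit_stump(sample))
    return models

def bagging_predict(models, x):
    """Aggregate the individual predictions by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: label is 1 when x > 5, plus one mislabeled point at x = 2
data = [(x, int(x > 5)) for x in range(11)]
data[2] = (2, 1)

models = bagging_fit(data)
print(bagging_predict(models, 0), bagging_predict(models, 9))
```

Because each stump sees a slightly different resample, the single mislabeled point affects only some of them, and the vote smooths out its influence. That is the variance reduction described above, under the assumptions of this toy setup.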

Boosting is like bagging, but it trains its weak classifiers in sequence and places more weight on the observations that earlier classifiers got wrong. In each iteration, misclassified observations are given more weight in the next round of training, which raises their chance of being classified correctly, and this repeats until a stopping point is reached. This can be viewed as course-correcting: the data weights that need an extra boost are the ones that get it. The algorithm in turn optimizes the cost function, but its weaknesses include overfitting, sensitivity to outliers, the need to revisit the choice of stopping iteration, and a lack of transparency due to the model's complexity.
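The reweighting loop can be sketched in the style of AdaBoost, one well-known boosting algorithm. The post does not name a specific variant, so treat that choice as an assumption. The function names, the threshold-stump weak learner, and the toy data below are likewise hypothetical.

```python
import math

def fit_weighted_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) for the best one-feature stump."""
    best = None
    for threshold in xs:
        for polarity in (1, -1):
            preds = [polarity if x > threshold else -polarity for x in xs]
            error = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost_fit(xs, ys, n_rounds=3):
    """Labels must be -1 or +1. n_rounds is the stopping point."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(n_rounds):
        error, threshold, polarity = fit_weighted_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # vote strength of this stump
        ensemble.append((alpha, threshold, polarity))
        # Raise the weight of misclassified observations, lower the rest
        preds = [polarity if x > threshold else -polarity for x in xs]
        weights = [w * math.exp(-alpha * y * p) for w, y, p in zip(weights, ys, preds)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def adaboost_predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(alpha * (polarity if x > threshold else -polarity)
                for alpha, threshold, polarity in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical toy data: positives sit in the middle, so no single threshold separates them
xs = list(range(10))
ys = [1 if 3 <= x <= 6 else -1 for x in xs]

ensemble = adaboost_fit(xs, ys, n_rounds=3)
print([adaboost_predict(ensemble, x) for x in xs])
```

On this toy data no single stump can get every point right, but three reweighted rounds are enough for the weighted vote to do so. On noisy data the same mechanism keeps increasing the weight of outliers, which is where the overfitting and outlier-influence weaknesses come from.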

Known as the least understood ensemble technique, stacking creates ensembles from a diverse group of strong classifiers. Diversity is important when creating ensemble models because it allows learners that are strong in different regions of the feature space to combine their abilities and reduce the risk of misclassification. Stacking uses different tiers of classification training (Vorhies, 2016). If Tier 1 misclassifies regions of the feature space, then Tier 2 uses those misclassified areas to correct that behavior in the next phase. The greatest weakness of this type of ensemble, like the other algorithms above, is that it lacks transparency in how it arrives at a meta-classifier that adjusts for its errors.
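A two-tier arrangement might look roughly like the sketch below. It is deliberately tiny and makes several assumptions: the Tier 1 models are simple single-feature threshold rules standing in for the stronger classifiers a real stack would use, the Tier 2 meta-classifier is a lookup table of majority labels, and all names and data are hypothetical.

```python
from collections import Counter, defaultdict

def fit_stump(rows, labels, feature):
    """Tier 1 model: best rule of the form 'predict 1 when row[feature] > t'."""
    best = None
    for t in sorted({row[feature] for row in rows}):
        errors = sum(int(row[feature] > t) != y for row, y in zip(rows, labels))
        if best is None or errors < best[0]:
            best = (errors, t)
    threshold = best[1]
    return lambda row: int(row[feature] > threshold)

def fit_meta(base_outputs, labels):
    """Tier 2 model: majority label for each pattern of Tier 1 predictions."""
    table = defaultdict(Counter)
    for pattern, y in zip(base_outputs, labels):
        table[pattern][y] += 1
    default = Counter(labels).most_common(1)[0][0]  # fallback for unseen patterns
    lookup = {p: c.most_common(1)[0][0] for p, c in table.items()}
    return lambda pattern: lookup.get(pattern, default)

def stacking_fit(rows, labels):
    half = len(rows) // 2
    # Tier 1 is trained on the first half of the data only
    base = [fit_stump(rows[:half], labels[:half], f) for f in (0, 1)]
    # Tier 2 learns from Tier 1's predictions on the held-out second half
    held_out = [tuple(m(r) for m in base) for r in rows[half:]]
    meta = fit_meta(held_out, labels[half:])
    return lambda row: meta(tuple(m(row) for m in base))

# Hypothetical toy data: a 4x4 grid, label 1 only when both features are >= 2.
# The rows are ordered so that each half covers the whole grid in a checkerboard.
grid = [(a, b) for a in range(4) for b in range(4)]
rows = [p for p in grid if sum(p) % 2 == 0] + [p for p in grid if sum(p) % 2 == 1]
labels = [int(a >= 2 and b >= 2) for a, b in rows]

model = stacking_fit(rows, labels)
print([model(r) for r in rows])
```

Each Tier 1 rule looks at one feature and therefore misclassifies part of the grid. Tier 2 sees where their predictions agree or disagree and learns how to combine them. Training Tier 2 on held-out predictions is a design choice made here to keep it from simply trusting Tier 1's fit to its own training data.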

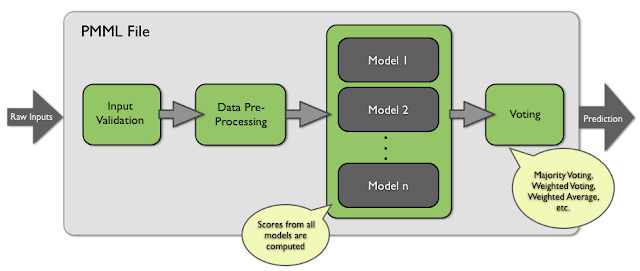

Figure 1: Different individual predictors are combined to create an optimal decision boundary.

References:

Vorhies, W. (2016, May 25). Want to Win Competitions? Pay Attention to Your Ensembles. Retrieved July 2017, from datasciencecentral.com: http://www.datasciencecentral.com/m/blogpost?id=6448529%3ABlogPost%3A428995
